
The event that forced Galton to question his beliefs also illustrates what makes ensembles so powerful: if you have different, independent models, each trained on a different part of the data for the same problem, they will work better together than individually. The reason? Each model learns a different part of the concept. As a result, each model produces some correct results and some errors, depending on its “knowledge.”

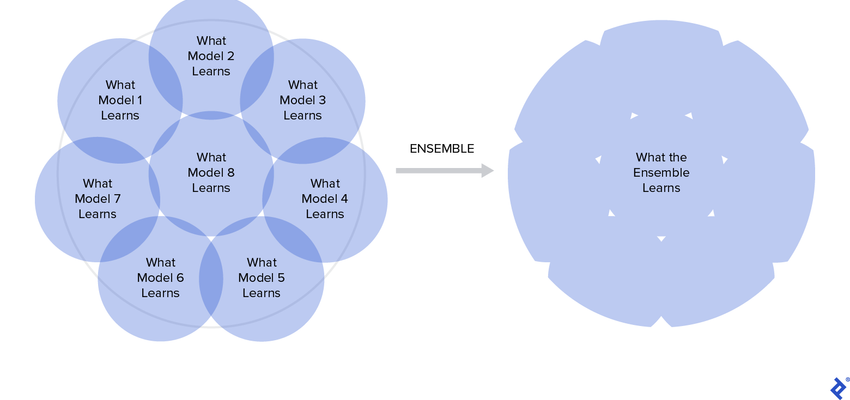

But the most interesting thing is that the correct parts complement one another while the errors tend to cancel each other out.
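A small simulation can make the cancellation concrete. The sketch below assumes each model's estimate is the true value plus independent Gaussian noise. The function `simulate`, the parameters `truth` and `noise_sd`, and all the numbers are illustrative choices, not anything taken from Galton's data.

```python
import random
import statistics


def simulate(n_models, n_trials=2000, truth=10.0, noise_sd=2.0, seed=0):
    """Compare the spread of one noisy estimate with the spread of an average.

    Assumption: every model's error is independent Gaussian noise around `truth`.
    Returns (variance of a single estimate, variance of the n_models average).
    """
    rng = random.Random(seed)
    singles, averages = [], []
    for _ in range(n_trials):
        estimates = [truth + rng.gauss(0.0, noise_sd) for _ in range(n_models)]
        singles.append(estimates[0])                   # one model on its own
        averages.append(statistics.fmean(estimates))   # the "crowd" answer
    return statistics.pvariance(singles), statistics.pvariance(averages)


single_var, ensemble_var = simulate(n_models=25)
print(f"variance of a single estimate:  {single_var:.3f}")
print(f"variance of a 25-model average: {ensemble_var:.3f}")
```

Under the independence assumption, the second number should come out far smaller than the first. If the errors were correlated, the gap would narrow.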

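To get this effect, train high-variance models (such as decision trees) on distinct subsets of the data. Each model overfits its own subset, but because the models overfit in different ways, averaging their predictions removes much of that variance: for N models with independent errors, the variance of the average falls roughly as 1/N. In practice the models are never fully independent, so the variance shrinks rather than vanishes, but the combined model is still more robust than any single one.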
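The idea in the paragraph above can be sketched with only the standard library. This is a minimal illustration under stated assumptions, not a production implementation: the subsets are bootstrap samples (drawn with replacement, as in bagging), the data is a synthetic noisy sine curve, and a 1-nearest-neighbour lookup stands in for a decision tree, since on one-dimensional data with distinct inputs a fully grown regression tree makes the same kind of memorizing, high-variance prediction. The helper names `fit_1nn`, `predict_1nn`, and `mse` are hypothetical.

```python
import bisect
import math
import random
import statistics


def fit_1nn(points):
    """'Train' a high-variance model by memorizing the sorted (x, y) pairs."""
    pts = sorted(points)
    return [x for x, _ in pts], [y for _, y in pts]


def predict_1nn(model, x):
    """Return the target of the training point closest to x."""
    xs, ys = model
    i = bisect.bisect_left(xs, x)
    if i == 0:
        return ys[0]
    if i == len(xs):
        return ys[-1]
    return ys[i] if xs[i] - x < x - xs[i - 1] else ys[i - 1]


def mse(predictions, targets):
    """Mean squared error between two equal-length sequences."""
    return statistics.fmean([(p - t) ** 2 for p, t in zip(predictions, targets)])


rng = random.Random(0)

# Synthetic training data (an assumption for this sketch): y = sin(x) + noise.
train = []
for _ in range(200):
    x = rng.uniform(0.0, 2.0 * math.pi)
    train.append((x, math.sin(x) + rng.gauss(0.0, 0.5)))

# Noise-free evaluation grid, so errors are measured against the true curve.
grid = [2.0 * math.pi * i / 199 for i in range(200)]
targets = [math.sin(x) for x in grid]

# Train 50 models, each on its own bootstrap sample of the training set.
models = [fit_1nn(rng.choices(train, k=len(train))) for _ in range(50)]
per_model_preds = [[predict_1nn(m, x) for x in grid] for m in models]

# Average error of the individual models vs. error of the averaged prediction.
single_mse = statistics.fmean([mse(p, targets) for p in per_model_preds])
ensemble_preds = [statistics.fmean(column) for column in zip(*per_model_preds)]
ensemble_mse = mse(ensemble_preds, targets)

print(f"average MSE of individual models: {single_mse:.3f}")
print(f"MSE of the averaged ensemble:     {ensemble_mse:.3f}")
```

Because squared error is convex, the error of the averaged prediction can never exceed the average error of the individual models. How much lower it gets depends on how differently the models overfit.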

Just like in Galton’s case, when all data from all sources is combined, the result is “smarter” than isolated data points.